Einführung in die Constraint-basierte formale Verifikation von neuronalen Netzen

Künstliche Intelligenz (KI) hat sich bis heute bereits in vielen Bereichen als nützlich erwiesen und ist in einigen Bereichen, wie Computer Vision, sogar unverzichtbar geworden. In sicherheitskritischen Bereichen gibt es beim Einsatz von KI jedoch vieles zu beachten. Um einen ersten Eindruck davon zu vermitteln, zeigen die folgenden Bilder einige schwer erkennbare Verkehrszeichen aus einer gewöhnlichen deutschen Straßenszene. In den leicht variierten Bildern dieser Szenen wird es sogar fast unmöglich einige der Verkehrszeichen zu erkennen. Wir wollen überprüfen, ob ein solches unerwünschtes Verhalten, wie das nicht korrekte Erkennen von Verkehrszeichen, innerhalb eines vernünftigen Rahmens von Beleuchtungsbedingungen ausgeschlossen werden kann.

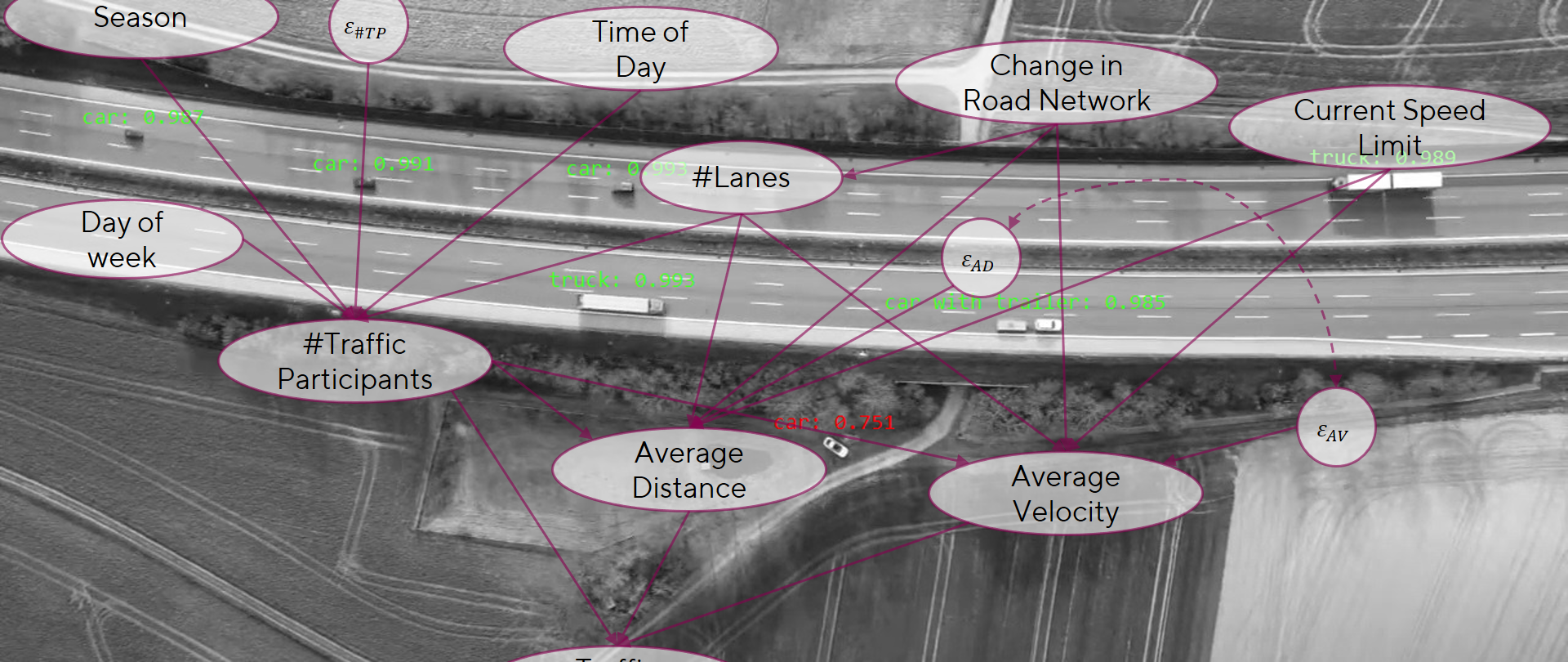

Während in unserem Alltag die Digitalisierung immer weiter voranschreitet, verlassen wir uns auch zunehmend auf Software, die selbst bei den schwierigsten Aufgaben einwandfrei funktionieren soll. Idealerweise wird dieses Vertrauen durch Beweise untermauert. Für Software gibt es viele bewährte Ansätze, um das beabsichtigte und korrekte Verhalten von Algorithmen nachzuweisen. Dies ist für diverse Anwendungsbereiche notwendig und für sicherheitskritische Anwendungen, wie das autonome Fahren, sogar zwingend erforderlich. Bei vielen Anwendungsfällen basieren die State-of-the-Art-Ansätze dabei auf maschinellem Lernen (ML)¹.

Zum Beispiel wird heutzutage in der Bildverarbeitung die Objekterkennung typischerweise mit ML angegangen. Solche ML-Systeme lernen mithilfe von Trainingsdaten, eine Aufgabe zu lösen. Während die Funktion von Menschen definiert wird, wird die Funktionalität vom ML-Algorithmus selbst erlernt. Das Problem bei ML-basierten Anwendungen besteht nun jedoch darin, dass ihnen derzeit bewährte Methoden zur formalen Verifikation ihres beabsichtigten Verhaltens fehlen. Daher ist es momentan nicht möglich, die gleichen Garantien abzuleiten wie in der klassischen Softwareentwicklung. Das Überwinden dieser Lücke wird mit Hochdruck vorangetrieben, insbesondere von Industriezweigen, die ML-Komponenten in sicherheitskritischen Anwendungen einsetzen wollen. Ein naheliegendes Beispiel ist an dieser Stelle die Automobilindustrie, die am automatisierten Fahren auf Basis von ML-Komponenten arbeitet.

Diese Blogreihe widmet sich der formalen Verifikation von ML-Modellen. Dafür stellen wir zunächst das Thema allgemein vor und präsentieren daraufhin einen konkreten Ansatz für neuronale Netze. Jemandem, der sich bisher noch nicht mit diesem Themenfeld beschäftigt hat, stellen sich womöglich Fragen wie:

- Was wollen wir verifizieren?

- Was ist formale Verifikation?

- Warum brauchen wir formale Verifikation?

- Wie verifiziert man neuronale Netze mithilfe von Constraints?

Wenn solche Fragen aufgekommen sind, dann ist das hier die richtige Stelle für ein paar Antworten! Beginnen wir also ohne weitere Umschweife mit den wichtigsten Fragen …

Was wollen wir verifizieren?

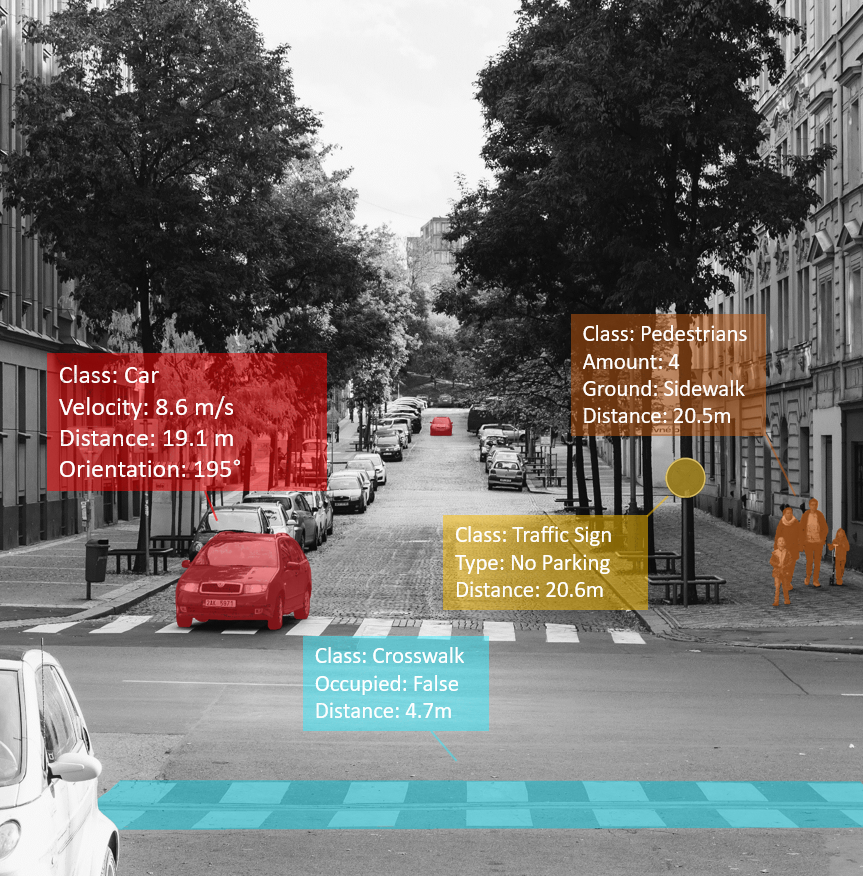

Kurz gesagt, die korrekte Funktionalität von ML-Systemen wie neuronalen Netzen. Genauer gesagt benötigen wir eine formale Beschreibung des beabsichtigten Verhaltens des ML-Systems, einschließlich der Definitionen für Fehlfunktionen und des Eingabebereichs, in dem das System ordnungsgemäß funktionieren soll. Zur Veranschaulichung betrachten wir bereits das Beispiel, welches das letzte Ziel dieser Blogreihe sein wird: ein formal verifizierter Stoppschild-Detektor.

Gehen wir also von einem neuronalen Netz zur Detektion von Stoppschildern aus, das auf einer Teilmenge des German Traffic Sign Detection Benchmark (GTSDB) Datensatzes trainiert wurde, die nur Bilder enthält, auf denen genau ein Stoppschild enthalten ist. Wir erwarten dann, dass dieses Netzwerk Stoppschilder in Bildern wie den folgenden korrekt erkennt:

Die Betonung liegt dabei auf „Bildern wie den hier gezeigten“. Diese Teilmenge des GTSDB ist nur ein Auszug aller Szenarien, die im Straßenverkehr in Deutschland anzutreffen sind. Idealerweise ist diese Stichprobe auch repräsentativ für die Daten, die wir nicht gesehen haben. Daher sollte ein Datensatz das allgemeine Konzept der relevanten Szenarien darstellen. Im ML-Jargon bedeutet dies, dass die Daten ihren Entstehungsprozess abbilden und damit repräsentativ für die Datenverteilung sind, die in der realen Welt angetroffen wird.

Wenn jedoch Daten aus einer anderen Verteilung als Eingabe für ein bereits trainiertes ML-Modell verwendet werden, wäre es gefährlich zu erwarten, dass das Modell immer noch einwandfrei funktioniert. Als Beispiel dafür sind im Folgenden Bilder zu sehen, die vom GTSDB-Datensatz konzeptionell nicht repräsentiert werden:

Das korrekte Verhalten von ML-Algorithmen unter Domain Shifts sicherzustellen, d.h. Daten von außerhalb der Trainingsdatenverteilung richtig zu verarbeiten, wird durch das Gebiet der Domain Adaptation behandelt, was selbst ein umfangreiches Thema ist. Wir wollen jedoch keine Domain Adaptation durchführen. Dieser kurze Exkurs soll lediglich verdeutlichen, dass wir uns Gedanken über unsere Domäne und insbesondere über unsere Erwartungen an das Verhalten des neuronalen Netzes innerhalb dieser Domäne machen müssen.

In der Praxis müssen wir mit teilweise verdeckten Verkehrszeichen, schwierigen Wetterbedingungen, vielseitigen Beleuchtungssituationen und vielen anderen schwierigen Situationen umgehen. Hier ist zum Beispiel ein Bild aus dem GTSDB-Datensatz mit einem Stoppschild, das selbst für das menschliche Auge ziemlich schwer zu erkennen ist:

Dieses Beispiel ist nicht einmal der schwierigste Fall, den wir erwarten und berücksichtigen müssen. Ungünstige Umstände können es noch schwerer machen Stoppschilder wie dieses zu erkennen. Solche Umstände ergeben sich beispielsweise aus schlechten Wetterbedingungen wie Nebel oder schwierigen Lichtverhältnissen bei Sonnenauf- und -untergang. Daher stehen wir vor der Frage, wie gut ein ML-System für seine angedachte Aufgabe sein muss. Insbesondere wenn ML-Systeme mit Menschen verglichen werden, ergeben sich hier einige Schwierigkeiten. Diese Frage muss für jede ML-Funktion individuell in Abhängigkeit vom Einsatzzweck und den damit einhergehenden Risiken beantwortet werden. Wir wollen an dieser Stelle keine Sicherheitsanforderungen für unseren Stoppschilder-Detektor definieren. Jedoch sollte im Kopf behalten werden, dass ML-Systeme ihre Grenzen haben und wir die Domäne genau spezifizieren müssen, für die wir korrektes Verhalten erwarten.

Damit sind wir wieder bei der Ausgangsfrage „Was soll verifiziert werden?“. Wir wollen beweisen, dass neuronale Netze innerhalb der definierten Domäne wie vorgesehen funktionieren. Für unser Beispiel bedeutet dies, dass das System bis zu bestimmten Schwellenwerten unempfindlich gegenüber Änderungen an den Beleuchtungsbedingungen sein soll. In der Praxis werden solche Schwellenwerte meist aus Sicherheitsanforderungen abgeleitet.

Was ist formale Verifikation?

Formale Verifikation ist der Prozess des Nachweises der Korrektheit von Eigenschaften eines Systems. In unserem Kontext wollen wir das korrekte Verhalten eines neuronalen Netzes in bestimmten Situationen garantieren. Dazu benötigen wir eine formal beschreibbare Hypothese und zusätzlich einen formalen Ansatz, um diese Hypothese zu beweisen oder zu widerlegen. Das Konzept, das wir hier verwenden, ist der „Beweis durch Widerspruch“. Diese Art des Beweises geht davon aus, dass das Gegenteil einer Hypothese wahr ist und versucht diese Gegenthese zu beweisen. Existiert ein Beispiel, das die Gegenthese beweist, so spricht man von einem Gegenbeispiel zur ursprünglichen These. Veranschaulichen wir dies mit einem einfachen Beispiel der natürlichen Sprache mit der These: „Alle Vögel können fliegen“. Die zugehörige Gegenthese ist: „Es gibt mindestens einen Vogel, der nicht fliegen kann“. „Pinguine“ oder „Strauße“ wären damit Gegenbeispiele zur ursprünglichen These und würden diese somit widerlegen.

Für ein eher mathematisches Beispiel kann der folgende Ausschnitt ausgeklappt werden, der bereits ein wenig die Funktionsweise des in Teil 2 vorgestellten Ansatzes vorwegnimmt.

Für den Widerspruchsbeweis wird eine Gegenthese aufgestellt, die mathematisch gesehen das Gegenteil der ursprünglichen These formuliert. Beweise für diese Gegenthese sind zugleich Gegenbeispiele für die ursprüngliche These. Dies mag auf den ersten Blick unnötig kompliziert erscheinen, in der Praxis ermöglicht dieser Ansatz jedoch ein einfaches Design der gesuchten Gegenbeispiele. Dies wird durch das folgende Beispiel veranschaulicht. Die Vorteile und Funktionsweise dieses Ansatzes werden in Teil 2 näher erläutert:

These: Für das gegebene Modell eines neuronalen Netzes

$$
f(x) =
\begin{pmatrix} f_1(x) \\ f_2(x) \end{pmatrix} =
\begin{pmatrix}
x_1 \cdot \omega_{1,1} + x_2 \cdot \omega_{1,2} + b_1 \\
x_1 \cdot \omega_{2,1} + x_2 \cdot \omega_{2,2} + b_2
\end{pmatrix}
$$

mit den Gewichten

$$
\begin{pmatrix}
\omega_{1,1} & \omega_{1,2} \\
\omega_{2,1} & \omega_{2,2}
\end{pmatrix} =
\begin{pmatrix}
1 & 4 \\
3 & -3
\end{pmatrix}
$$

und dem Bias

$$
\begin{pmatrix} b_1 \\ b_2 \end{pmatrix} =
\begin{pmatrix} 5 \\ 1 \end{pmatrix}
$$

existiert keine Eingabe

$$
x = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}, \quad x_1 \in [0, 6], \quad x_2 \in [1, 2],
$$

so dass $f_1(x) \leq f_2(x)$.

Gegenthese: Es gibt mindestens eine Eingabe

$$
x = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}, \quad x_1 \in [0, 6], \quad x_2 \in [1, 2],
$$

so dass $f_1(x) \leq f_2(x)$.

Gegenbeispiel:

$$
x = \begin{pmatrix} 6 \\ 1 \end{pmatrix}
$$

Warum brauchen wir formale Verifikation?

Für sicherheitskritische Anwendungen, wie beispielsweise das autonome Fahren, sind Standard-ML-Metriken wie eine hohe Genauigkeit auf einem Testdatensatz nicht ausreichend. Neuronale Netze sind hochkomplexe Funktionen, die bekanntermaßen auch sehr volatil sind, vor allem wenn sie nicht richtig entworfen und trainiert werden. Diese Unzulänglichkeit wird von Adversarial Attacks ausgenutzt und wirft folglich berechtigte Bedenken auf. Adversarial Attacks sind Methoden, die darauf ausgelegt sind, Eingaben zu erzeugen, die ein unbeabsichtigtes Verhalten von neuronalen Netzwerken erzwingen. An dieser Stelle wollen wir einen kurzen Überblick über die relevantesten Punkte von Adversarial Attacks geben:

- Es gibt eine große Vielfalt an Ansätzen

- Die meisten der Verteidigungsstrategien richten sich spezifisch gegen bestimmte Angriffe und verbessern nicht unbedingt die Qualität / Robustheit von Netzwerken

- Angriffe sind darauf ausgelegt Netzwerke so einfach wie möglich zu täuschen, aber typischerweise nicht mit realistischen Eingaben

- So ziemlich jedes neuronale Netzwerk kann zuverlässig angegriffen und somit getäuscht werden

Die Diskrepanz zwischen einer hohen Erfolgsquote von Adversarial Attacks und der Tatsache, dass sie nicht unbedingt dazu gedacht sind, realistische Inputs zu produzieren, lässt eine große Lücke entstehen. Wenn wir uns jedoch ML-Systeme ansehen, die Teil sicherheitskritischer Anwendungen sind, benötigen wir genaue Informationen über die Domäne des korrekten Verhaltens. Obwohl Ansätze zur formalen Verifikation in gewisser Hinsicht auch spezielle Arten von Adversarial Attacks sind, sind sie durch ihren Fokus auf realistische Transformationen, die bestimmte Eingabedomänen abbilden, aussagekräftiger. Solche Transformationen sind nicht nur intuitiv aussagekräftiger, sondern minimieren bei richtigem Design auch Domain Shifts, was insgesamt zu einem vielversprechenden Verifikationsprozess führt.

Wofür also haben wir diesen technischen Exkurs zum Thema “Adversarial Attacks” gemacht? Es gibt viele Gesetze, Normen und branchenspezifische Freigabeprozesse, die für die vorgesehene Funktion von Softwarekomponenten Nachweise verlangen. Ohne diese kann auch eine einwandfrei funktionierende Softwarefunktion nicht freigegeben werden, da eben nicht garantiert werden kann, dass sie tatsächlich einwandfrei funktioniert. Wenn es sich um Software handelt, die ML-Komponenten enthält, können solche Beweise sehr schwierig und ohne geeignete Methoden sogar unmöglich sein. Sofern wir also Software betrachten, die neuronale Netze verwendet, muss ein geeignetes Verifikationsverfahren die beabsichtigte Aufgabe der Software und die Funktionalität des neuronalen Netzes berücksichtigen.

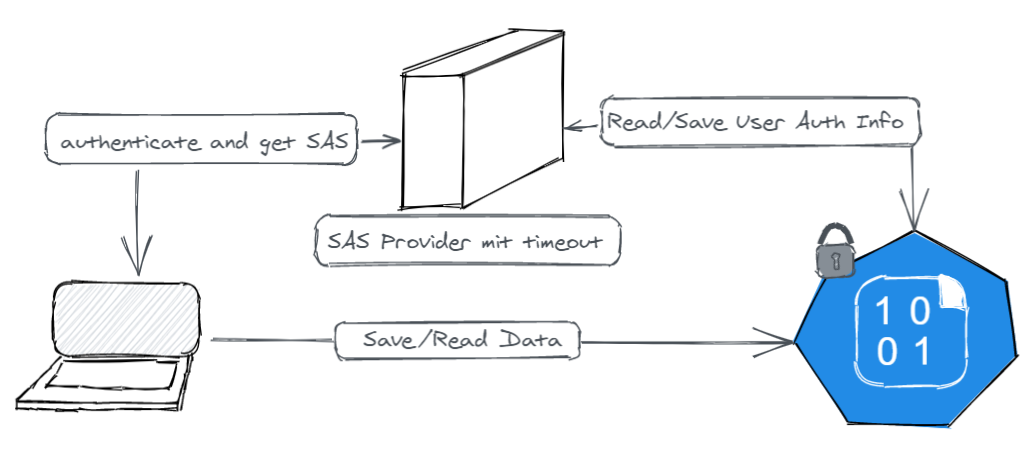

Wie verifiziert man neuronale Netze mithilfe von Constraints?

Der von uns verwendete Ansatz basiert auf dem Konzept des Beweises durch Widerspruch. Da wir zeigen wollen, dass sich ein neuronales Netz korrekt verhält, betrachten wir also die Gegenhypothese, dass es sich innerhalb des Suchraums nicht korrekt verhält. Folglich versuchen wir, ein Gegenbeispiel für richtiges Verhalten zu finden. Existiert kein solches Gegenbeispiel, so ist die ursprüngliche These bewiesen, d.h. das neuronale Netz verhält sich bezüglich der geprüften Eigenschaft sicher. Bei dem vorgestellten Ansatz wird der Suchraum durch ein einzelnes Datum oder einen Datensatz und mindestens eine Transformation, wie Kontrast- oder Helligkeitsänderung, repräsentiert. Die Transformationen bilden spezifische Eigenschaften ab und sind repräsentativ für ein definiertes, erwartetes Verhalten. All diese Informationen werden nun in formal überprüfbarer Form benötigt.

Hierfür verwenden wir Mixed Integer Linear Programming (MILP). MILP-Kodierungen sind im Wesentlichen ein System von linearen (Un-)Gleichungen und Variablengrenzen, die eine geeignete Darstellung für Optimierungs- und Constraint-Satisfaction-Probleme darstellen. Der Vorteil solcher MILP-Kodierungen ist die Verwendbarkeit von ganzen Zahlen und Gleitkommazahlen als Variablen, was eine Darstellung von neuronalen Netzen mit linearen Nebenbedingungen ermöglicht. Wenn wir also verifizieren wollen, dass ein neuronales Netz robust ist, beispielsweise gegen Verdunkelung bis zu einem gewissen Grad, dann müssen wir die folgenden Komponenten in eine MILP-Kodierung übersetzen:

- Der Suchraum, der auf Testdaten und den Transformationen basiert

- Alle Operationen des neuronalen Netzes

- Die Definition von richtigem Verhalten / Gegenbeispiele für richtiges Verhalten

Somit haben wir nun ausführlich die Aufgabe eingeführt „menschlich verständliche Sicherheitskriterien in ein korrektes und vollständiges mathematisches Problem zu übersetzen, das als Beweis für die Freigabe von Software mit neuronalen Netzen als Komponenten geeignet ist“. Im nächsten Kapitel werden wir uns detailliert mit einem Ansatz befassen, der sich dieser anspruchsvollen Aufgabe widmet …

¹ Typischerweise bezieht sich KI auf Systeme, die sich in Bezug auf eine bestimmte Aufgabe „intelligent“ verhalten, und ML sind die Ansätze/Algorithmen, die Konzepte oder Verhalten aus Daten lernen, d.h. ML wird unter anderem als Teil von KI gesehen. Wir wollen Verwirrung über solche Definitionen vermeiden und verwenden daher ab dieser Stelle nur noch den Begriff ML.

Abbildungsverzeichnis:

Abbildungen 1-8, 11-13: Houben, S., Schlipsing, M., Stallkamp, J., Salmen, J., & Igel, C. (2013). Proposal for IJCNN 2013 competition: The German Traffic Sign Detection Benchmark.

English Version

Part 1: Introduction to Constraint-based Formal Verification of Neural Networks

Artificial Intelligence (AI) has proven useful in many domains and has even become indispensable in some areas, such as computer vision. However, there is much to consider when AI is used in safety-critical areas. To give a first impression, the following images show some hard-to-detect traffic signs from an ordinary German street scene. In slightly varied versions of these scenes, some of the traffic signs become even harder to detect. We want to verify that such unwanted behavior, like failing to detect traffic signs correctly, can be ruled out within a reasonable range of illumination conditions like the ones in the images shown below:

While our everyday life is becoming more and more digital, we also increasingly rely on software to work properly even on the hardest tasks. Ideally, this trust is backed by evidence. For software, there are many established approaches to prove the intended and correct behavior of algorithms. This is necessary for diverse application areas and even mandatory for safety-critical applications like autonomous driving. For many use cases, algorithms based on machine learning (ML)¹ are the state-of-the-art approach.

For example, in image processing, object detection is typically approached with ML nowadays. Such ML systems learn to solve a task from training data. While the function is defined by humans, the functionality is learned by the ML algorithm itself. However, the problem with ML-based applications is that they currently lack established methods for the formal verification of their intended behavior. Consequently, with state-of-the-art methods we cannot derive the same guarantees as in classical software development. Closing this gap is being pursued with great urgency, especially by industries that want to deploy ML components in safety-critical applications. An obvious example is the automotive industry, which is working on automated driving based on ML components.

This blog series is dedicated to formal verification of ML models. We introduce the topic in general and additionally present a concrete approach designed for neural networks. If you have never dealt with this topic before, the title may already raise some questions like:

- What do we want to verify?

- What is formal verification?

- Why do we need formal verification?

- How do we formally verify neural networks using constraints?

If such questions come to mind, then you are in the right place. For now, let us start with the most important questions …

What do we want to verify?

In short, the correct functionality of ML systems like neural networks. More precisely, we need a formal description of the intended behavior of the ML system, including definitions for malfunctions and the input domain where the system should work properly. To illustrate this, we look at the example that will be the final goal of this series: a formally verified stop sign detector.

Let us assume a neural network for stop sign detection that has been trained on a subset of the German Traffic Sign Detection Benchmark (GTSDB) data set containing only images with exactly one stop sign. We then expect this network to detect stop signs correctly in images like the ones below:

The emphasis lies on “images like the ones shown here”. This set of training data from the GTSDB is just a sample from all scenarios that can be encountered in traffic in Germany. Ideally, this sample is also representative of the data that we have not seen. Hence, a dataset should stand for the general concept of the relevant scenarios. In ML terms, this means that the data should sufficiently depict its generating process and therefore be representative of the data distribution encountered in the real world. However, if data from another distribution is taken as input to an already trained ML model, it would be dangerous to expect the model to still work properly. The following pictures show some examples of stop signs that are not sufficiently represented by the GTSDB:

Enabling ML algorithms to perform under domain shifts, i.e., to handle data from outside the training data distribution, is addressed by the field of domain adaptation, which itself is an extensive topic. We are not dealing with domain adaptation in this blog. This short digression should only make clear that we must think about our domain and especially about our expectations regarding the behavior of the neural network within this domain.

In practice, we must deal with partially occluded traffic signs, difficult weather conditions, various lighting situations and many other tricky situations. For example, here is an image from the GTSDB dataset with a stop sign that is pretty hard to detect, even for the human eye:

This example is not even the worst case we have to expect and consider. Unfavorable circumstances can make it even harder to detect stop signs like this one. Such circumstances arise, for example, from bad weather conditions like fog or from difficult lighting conditions at sunrise and sunset. Therefore, we are facing the question of how good an ML system has to be at its intended task, especially in comparison to humans. This difficult question must be answered individually for each ML function, depending on its purpose and the associated risks. We do not want to define safety requirements for our stop sign detector at this point. The important part to keep in mind is that ML systems have their limitations, and we must precisely specify the domain for which we expect correct behavior.

This brings us back to the initial question of “what should be verified?”. We want to prove that neural networks work as intended within the defined search space. For our example, this means that the system should be resilient against influences of different lighting conditions up to certain thresholds. In practice, such threshold values are usually derived from safety requirements.

What is formal verification?

Formal verification is the process of proving the correctness of properties of a system. In our context, we want to guarantee correct behavior of a neural network in certain situations. For that, we need a formally describable hypothesis and additionally a formal approach to prove or refute this hypothesis. The concept we are utilizing here is called “proof by contradiction”. This type of proof assumes that the opposite of a hypothesis is true and tries to prove this counter-thesis. If an example proving the counter-thesis exists, we call this example a counterexample with regard to the original hypothesis. If, conversely, it can be shown that no such example exists, the counter-thesis is false and the original hypothesis holds.

Let us illustrate this with a simple example based on natural language. Take the thesis “all birds can fly”. The counter-thesis is then “there exists at least one bird that cannot fly”, and “penguins” or “ostriches” are counterexamples to the original thesis, thus refuting it.

Unfold the block below for a more mathematical example, which is a little spoiler for the functionality of the approach presented in Part 2.

Thesis: For the given model

$$
f(x) =
\begin{pmatrix} f_1(x) \\ f_2(x) \end{pmatrix} =
\begin{pmatrix}
x_1 \cdot \omega_{1,1} + x_2 \cdot \omega_{1,2} + b_1 \\
x_1 \cdot \omega_{2,1} + x_2 \cdot \omega_{2,2} + b_2
\end{pmatrix}
$$

with the weights

$$
\begin{pmatrix}
\omega_{1,1} & \omega_{1,2} \\
\omega_{2,1} & \omega_{2,2}
\end{pmatrix} =
\begin{pmatrix}
1 & 4 \\
3 & -3
\end{pmatrix}
$$

and bias

$$
\begin{pmatrix} b_1 \\ b_2 \end{pmatrix} =
\begin{pmatrix} 5 \\ 1 \end{pmatrix}
$$

no input

$$
x = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}, \quad x_1 \in [0, 6], \quad x_2 \in [1, 2],
$$

exists such that $f_1(x) \leq f_2(x)$.

Counter-thesis: There exists at least one input

$$
x = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}, \quad x_1 \in [0, 6], \quad x_2 \in [1, 2],
$$

such that $f_1(x) \leq f_2(x)$.

Counterexample:

$$
x = \begin{pmatrix} 6 \\ 1 \end{pmatrix}
$$
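The counterexample can be checked by hand, and for this small model the whole input box can be searched exactly. The following is a minimal sketch in plain Python, not the approach from Part 2. It relies on the model being purely affine, so that `f_1(x) - f_2(x)` attains its minimum over the box at one of its four corners. The helper names `f` and `margin` are chosen here for illustration only.

```python
from itertools import product

# Weights and bias taken from the example above.
W = [[1, 4], [3, -3]]
b = [5, 1]

def f(x):
    """Evaluate the two outputs f_1(x) and f_2(x) of the affine model."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def margin(x):
    """Return f_1(x) - f_2(x); a value <= 0 makes x a counterexample."""
    f1, f2 = f(x)
    return f1 - f2

# Input box: x_1 in [0, 6], x_2 in [1, 2].
box = [(0, 6), (1, 2)]

# An affine function attains its minimum over a box at a corner,
# so inspecting the four corners is an exact search for this model.
corners = list(product(*box))
worst = min(corners, key=margin)

print(f((6, 1)))             # outputs at the stated counterexample
print(worst, margin(worst))  # corner with the smallest margin
```

At `x = (6, 1)` the outputs are `f_1 = 15` and `f_2 = 16`, so `f_1(x) <= f_2(x)` holds and the thesis is refuted. The corner shortcut no longer works once the network contains non-linear activations, which is one reason a constraint-based encoding is needed.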

Why do we need formal verification?

For some safety-critical scenarios, like autonomous driving, standard ML metrics like a good test set accuracy are simply not enough. Neural networks are highly complex functions, known to also be highly volatile if not designed and trained properly. This flaw is exploited by adversarial attacks and consequently raises legitimate concerns. Adversarial attacks are methods designed to create inputs that force unintended behavior of neural networks. At this point, we want to give a brief overview of the most relevant points about adversarial attacks for this blog:

- There is a high diversity of approaches

- Most defense strategies address specific attacks and do not necessarily improve the overall performance / robustness of networks

- Attacks are designed to fool the network as easily as possible, but typically without a focus on realistic inputs

- Pretty much any neural network can be attacked and fooled quite reliably

The discrepancy between a high success rate of adversarial attacks and the fact that they are not necessarily meant to produce realistic inputs leaves a big gap. However, when we look at ML systems that are part of safety-critical applications, we need precise information about the domain of correct behavior. Although approaches for formal verification are, in a sense, also special kinds of adversarial attacks, their focus on realistic input transformations, which represent specified domains of relevant inputs, makes them more meaningful. Such transformations are not only intuitively more significant, but also minimize domain shift if designed properly, resulting in a genuinely promising verification process.

So, why did we make this technical digression into adversarial attacks? There are many laws, standards and industry-specific release processes that require proof that software components work as intended. Without such proof, even a perfectly working software function cannot be released, because we cannot guarantee that it is indeed working perfectly. When we are talking about software that includes ML components, such proofs can be exceedingly difficult and, without suitable verification methods, even impossible to give. Hence, when we look at software that uses neural networks, a suitable verification method must consider the intended task of the software and the functionality of the neural network.

How do we formally verify neural networks using constraints?

The approach we are using is based on the concept of proof by contradiction. Since we want to show that a neural network behaves correctly, we look at the counter-hypothesis stating that it does not behave correctly within the search space. Consequently, we try to find a counterexample to correct behavior. If no such counterexample exists, then the original thesis holds true, i.e., the neural network behaves safely with regard to the checked property. With the presented approach, the search space is defined by a single sample or a data set and at least one transformation, such as a change of contrast or brightness. The robustness property that is checked is the invariance of the neural network’s output on the data under the given transformations. For example, classification results should stay the same when the brightness or contrast of the input is changed. Now we need all this information in a formally verifiable form. For this, we are using mixed integer linear programming (MILP). MILP encodings are essentially systems of linear equations and inequalities together with variable bounds, which are a suitable representation for optimization and constraint satisfaction problems. The advantage of such MILP encodings is that they allow both integer and continuous variables, which makes it possible to represent neural networks with linear constraints.
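To make the idea of a search space built from one sample and one transformation more concrete, here is a small sketch. It reuses the toy affine model from the mathematical example in the previous section. Everything else is an assumption made for illustration: the sample `x0`, the additive brightness shift `beta` applied to every input component, and the function names. Because the toy model is affine, the margin is affine in `beta`, and checking the two interval endpoints is an exact search. Real networks with non-linear activations do not allow this shortcut.

```python
# Toy affine model from the mathematical example (weights and bias).
W = [[1, 4], [3, -3]]
b = [5, 1]

def margin(x):
    """Return f_1(x) - f_2(x); class 1 is predicted while this is > 0."""
    f1 = W[0][0] * x[0] + W[0][1] * x[1] + b[0]
    f2 = W[1][0] * x[0] + W[1][1] * x[1] + b[1]
    return f1 - f2

def shift_brightness(x, beta):
    """Hypothetical transformation: add the same offset beta to every input."""
    return [xi + beta for xi in x]

def find_counterexample(x, beta_lo, beta_hi):
    """Return a beta in [beta_lo, beta_hi] that flips the decision, else None.

    The margin is affine in beta for this model, so its minimum over the
    interval lies at one of the two endpoints.
    """
    worst = min((beta_lo, beta_hi), key=lambda t: margin(shift_brightness(x, t)))
    return worst if margin(shift_brightness(x, worst)) <= 0 else None

x0 = [4, 1]  # hypothetical sample, classified as class 1 (margin 3)

print(find_counterexample(x0, -0.5, 0.0))  # darkening by at most 0.5
print(find_counterexample(x0, -1.0, 0.0))  # darkening by at most 1.0
```

For this sample, darkening by at most 0.5 yields no counterexample, so the property holds on that interval, while allowing a darkening of 1.0 produces the counterexample `beta = -1.0`. The threshold on `beta` plays the role of the safety-derived threshold discussed earlier.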

Thus, if we want to verify that a neural network is robust, for example against darkening up to a certain degree, then we have to translate the following parts into a MILP encoding:

- The search space, which is based on test data and the transformations

- All operations of the neural network

- The definition of correct behavior / counterexamples for correct behavior
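As an illustration of how a network operation can be turned into linear constraints with an integer variable, the following shows the common "big-M" style encoding of a single ReLU unit `y = max(0, z)`. This is a sketch under assumptions that the text above does not state: that the network uses ReLU activations, and that bounds `l < 0 < u` on the pre-activation `z` are known. The concrete encoding used in Part 2 may differ.

```latex
\begin{aligned}
y &\ge 0, \\
y &\ge z, \\
y &\le z - l\,(1 - \delta), \\
y &\le u\,\delta, \\
\delta &\in \{0, 1\}, \qquad l \le z \le u, \qquad l < 0 < u .
\end{aligned}
```

With `δ = 1` the constraints force `y = z` and `z ≥ 0`, and with `δ = 0` they force `y = 0` and `z ≤ 0`, so together they describe exactly the graph of the ReLU. The affine layers are already linear equations, and the counter-thesis (for example `f_1(x) ≤ f_2(x)`) is added as one more linear inequality. If the resulting MILP is infeasible, no counterexample exists in the search space.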

Up to this point, we have introduced at length the task of “translating human-understandable safety criteria into a sound and complete mathematical problem that is suitable as evidence for the release of software with neural networks as components”. In the next article, we will look in detail at an approach that performs this challenging task …

¹ Typically, AI refers to systems that behave “intelligently” in relation to a specific task, and ML refers to approaches/algorithms that learn concepts or behavior from data. This means ML is commonly seen as one part of AI. We want to avoid confusion about such definitions and therefore only use the term ML from this point on.

Table of Figures:

Figures 1-8, 11-13: Houben, S., Schlipsing, M., Stallkamp, J., Salmen, J., & Igel, C. (2013). Proposal for IJCNN 2013 competition: The German Traffic Sign Detection Benchmark.